Hey! I am a graduate student at Northeastern University pursuing an MS in Robotics. I am interested in the applications of robotics in our day-to-day lives and, more broadly, in robust sensor fusion for safe autonomy.

I am currently a researcher at the Robust Autonomy Lab, advised by Prof. David Rosen.

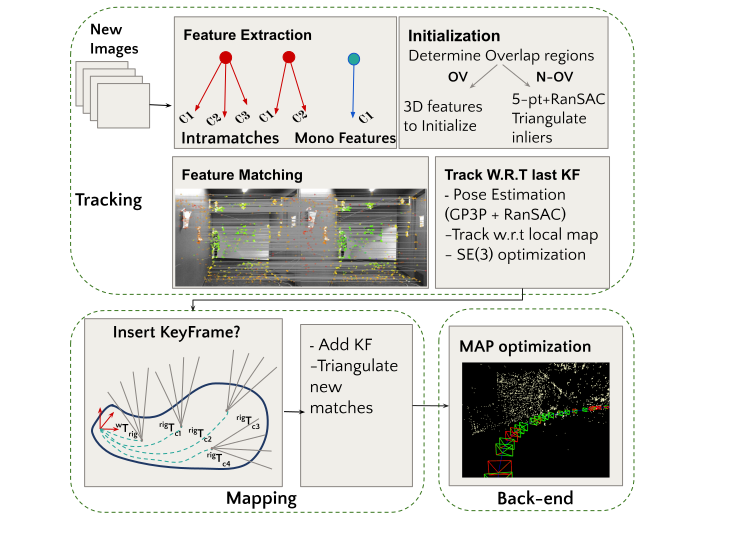

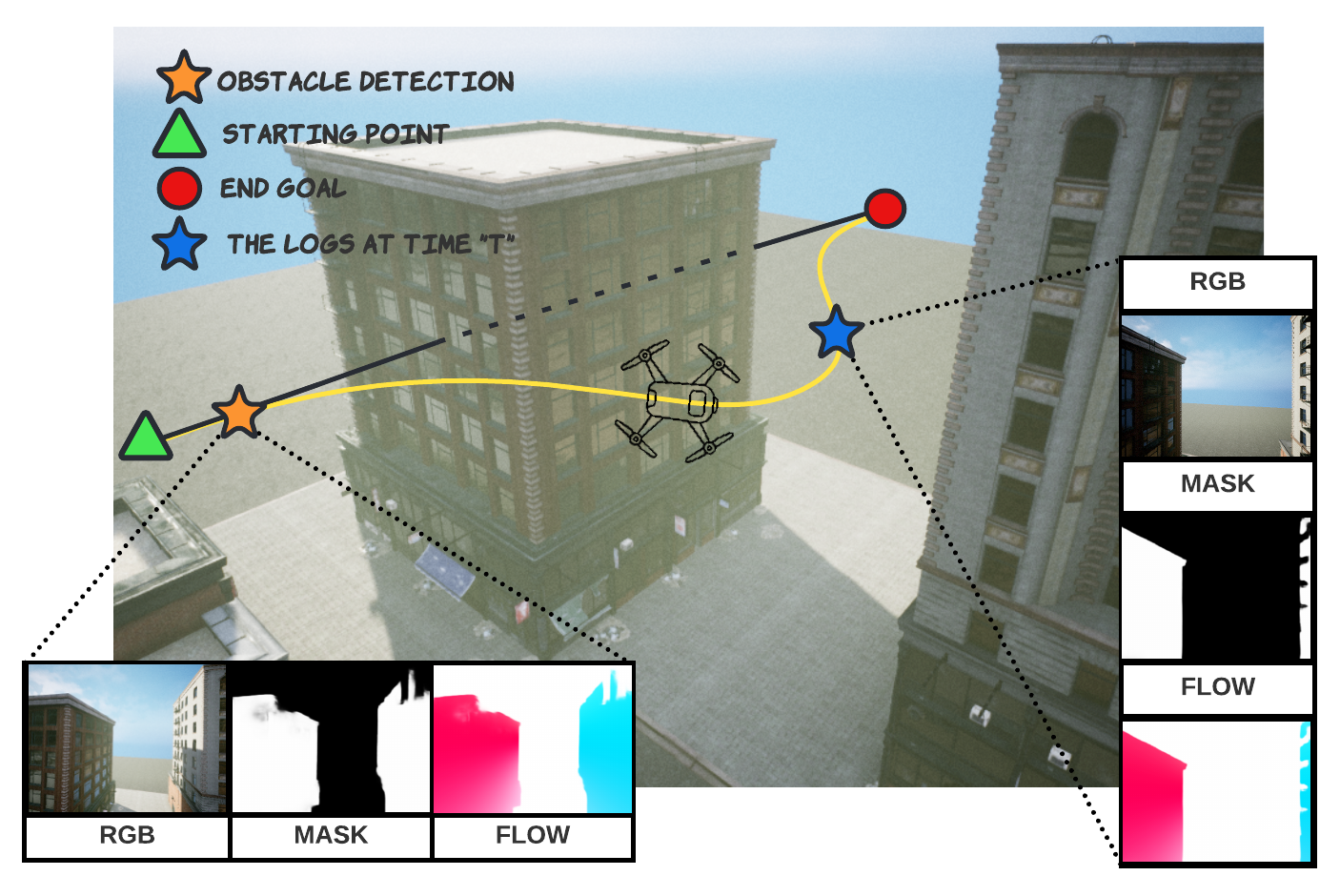

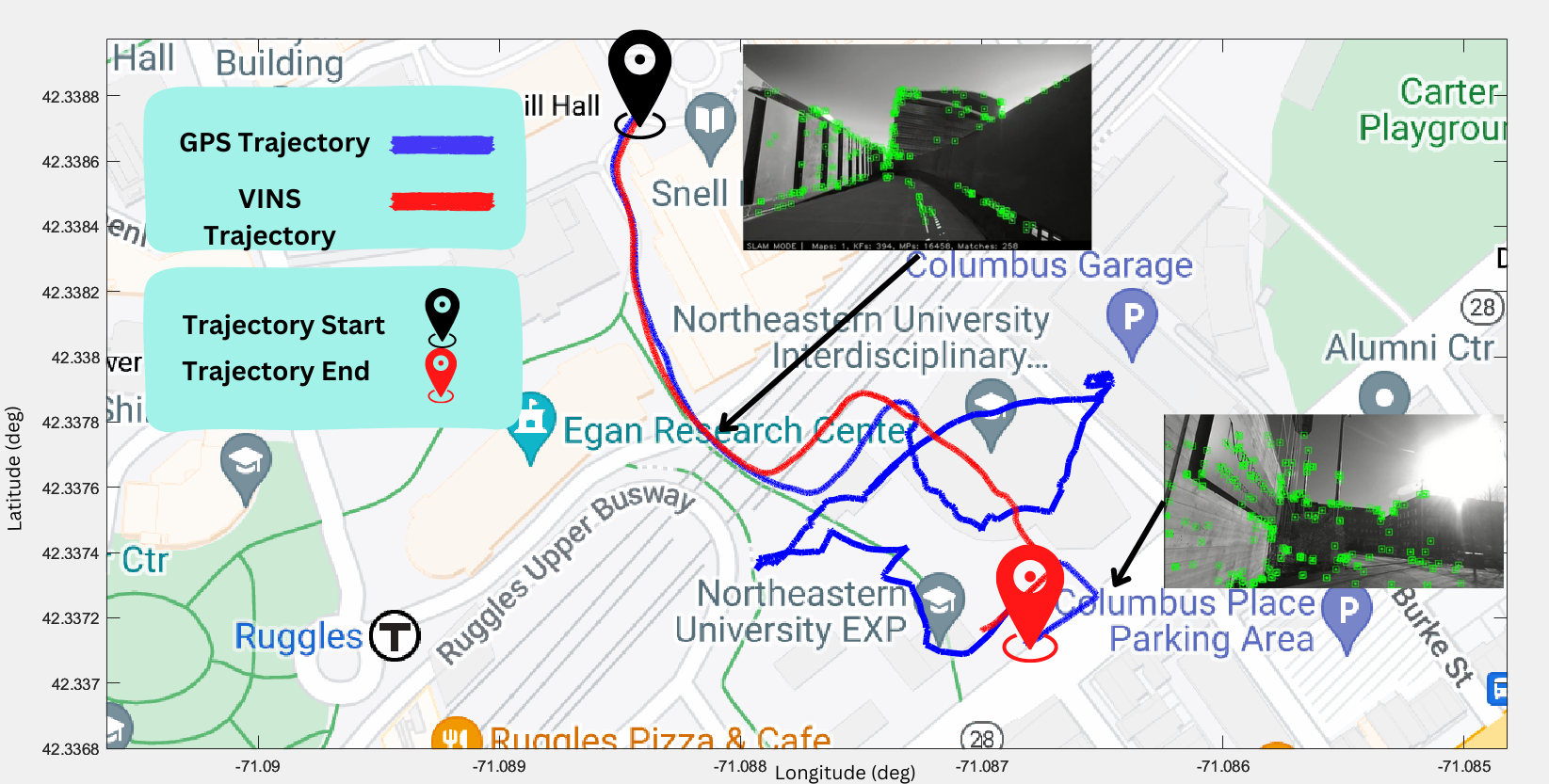

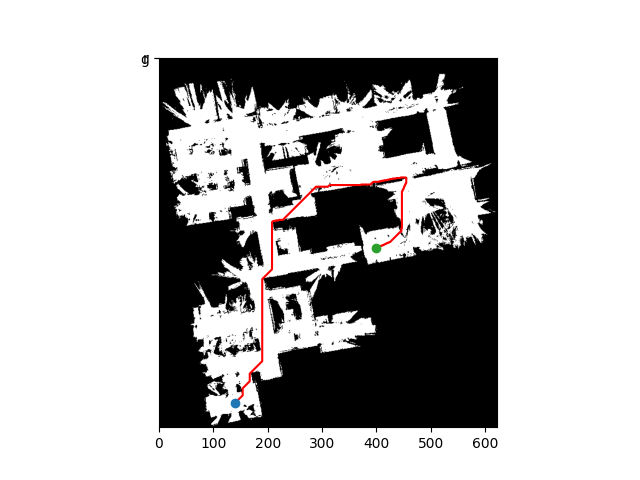

My work in the lab focuses on visual-inertial navigation systems for high-speed autonomous navigation. My ongoing projects include IMU Preintegration for Real-Time Visual-Inertial Odometry, inspired by the work of Forster et al.,

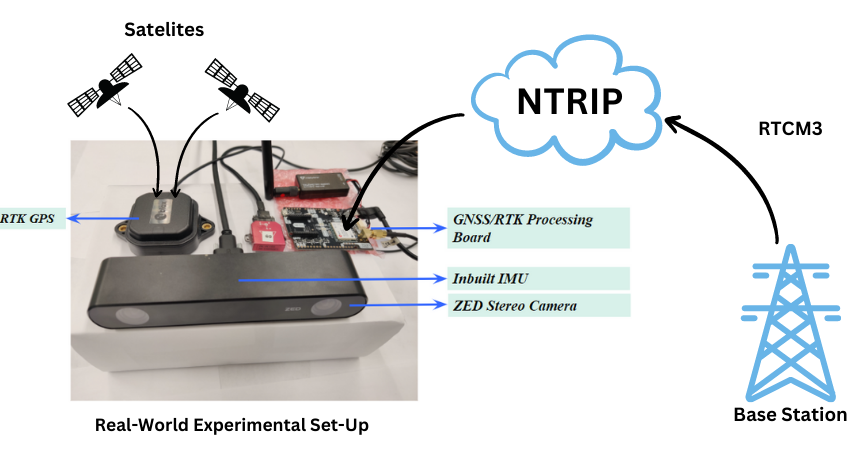

and GPS integration with a multi-camera SLAM system for global-aware localization tasks.

I graduated with a B.E. in Mechanical Engineering from the Birla Institute of Technology and Science, Pilani (2021).

Prior to joining Northeastern, I was a Research Assistant at the Robotics Research Center at IIIT Hyderabad under the guidance of Prof. Madhava Krishna.

At BITS Pilani, I was a part of the CRIS lab advised by Prof. B.K Rout where our team Sally Robotics worked on

autonomous driving. As the team leader in my third year, I had the opportunity to guide and collaborate with an

incredible team of 23 researchers. During my undergrad, I gained valuable experience with internships at

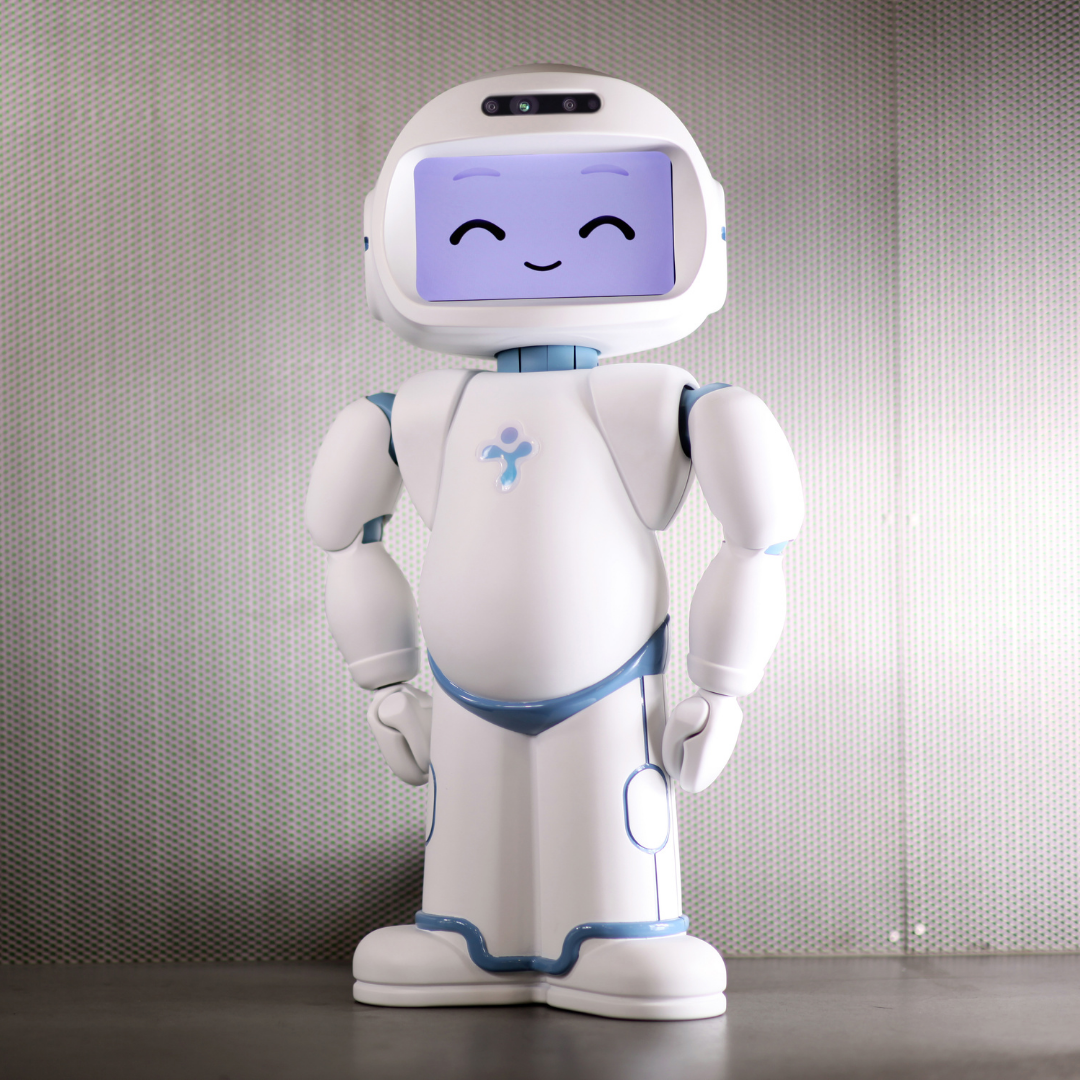

Invento Robotics working on autonomous navigation pipelines for mobile robots and at

Daimler India Commercial Vehicles developing automatic inspection devices in the Engine assembly line.

I am grateful to have wonderful mentors and collaborators who have helped me grow as a researcher and as a person, including Prof. David Rosen, Prof. Madhava Krishna, Prof. B.K Rout, Dr Pushyami Kaveti, Aditya Agarwal, Bipasha Sen, Vishal Reddy Mandadi, M Nomaan Qureshi, Raushan Kumar, Dr Balaji Viswanathan, and other awesome roboticists!